Day 1: Creating an AR App for iOS using Unity and ARKit

The purpose of this tutorial is to help you understand the fundamentals of ARKit and Augmented Reality. We use Unity, a cross-platform and simply amazing game engine, to build out an ARKit app.

When Augmented Reality was introduced to the masses in 2017 by Apple through iOS 11, in the form of ARKit, it was the largest AR-capable platform in the world, with millions of iPhones and iPads supporting it. The possibilities seemed endless. Now, a few years later, the tech has matured into a very stable platform, and there are at least a hundred million Apple devices out there that are AR capable. If you are interested in leveraging AR’s capabilities and building apps for iOS, you are in the right place.

In this tutorial, you will learn the technology and the process, understand how ARKit works on a real device, and use plane detection to identify and track vertical and horizontal planes. We will get to more advanced topics and build more complicated applications as the series progresses, but first let’s understand the fundamentals, which is what this tutorial aims to do.

This is part of a 30-day sprint in which we try to publish 30 projects in 30 days. This means building full projects from scratch, double-checking the code, writing the tutorial, and then posting it. If there are any typos, do let us know. I hope you enjoy this tutorial and project.

Pre-requisites

I recommend that you have a basic understanding of Unity and of development in general.

To run and test our ARKit app, we will need an ARKit-compatible iOS device, which means an iPhone 6s or newer. If you wish to follow along, you’ll need the following:

- macOS Catalina

- Unity 2019.2.18f1

- Visual Studio for Mac

- Xcode 11.3

- An iOS device

- Apple developer account (can be a free account)

Once you have downloaded Unity and the other tools mentioned above and have everything set up, we can dive right in.

Final Goal to be achieved

Our goal will be to create and deploy an AR application to our iOS device. This is what the end result will look like.

Before we start, let’s do the customary thing and ask: what is AR?

In a nutshell, Augmented Reality is the use of technology to superimpose any kind of visual or audible information onto the real world as we see it. Picture the “Iron Man” style of interactivity.

Now then, What is ARKit?

ARKit is a high-level augmented reality development framework that leverages the computing power and cameras of iOS devices, allowing developers to create AR apps and experiences.

Where does Unity fit into this?

Unity is a cross-platform game engine. If you have played any game on your Android phone or iPhone, chances are high that it was made using Unity. Since it is a game engine, its workflow is by design 3D-centric and intuitive (if you have worked with SceneKit on iOS, you will know what I mean). Hence it was a natural choice for Apple, and it was among the first engines to come with ARKit SDK support. If you want to know more about Unity, there are plenty of excellent sources out there, but for the sake of this tutorial I’m assuming that you already have a basic understanding of Unity, or at least have gone through what Unity offers. Don’t worry if you are not well versed in it, as I will explain things as we go along.

I’ll be dividing up the tutorial into these sections:

- Setting up Unity to work with AR

- Creating our AR Scene

- Building the App with Unity

- Deploying to an iOS Device with Xcode

Setting up Unity for AR development

Creating Project and Configuring Platform

Using Unity 2019.2.18f1, create a new project and name it to your liking. You should be using Unity Hub, as it makes project management much easier. Once Unity has created the project and you’re in the Unity window, the primary platform for the application will be set to Standalone by default. We need to switch it to iOS. To do this, open the Build Settings window from the menu bar:

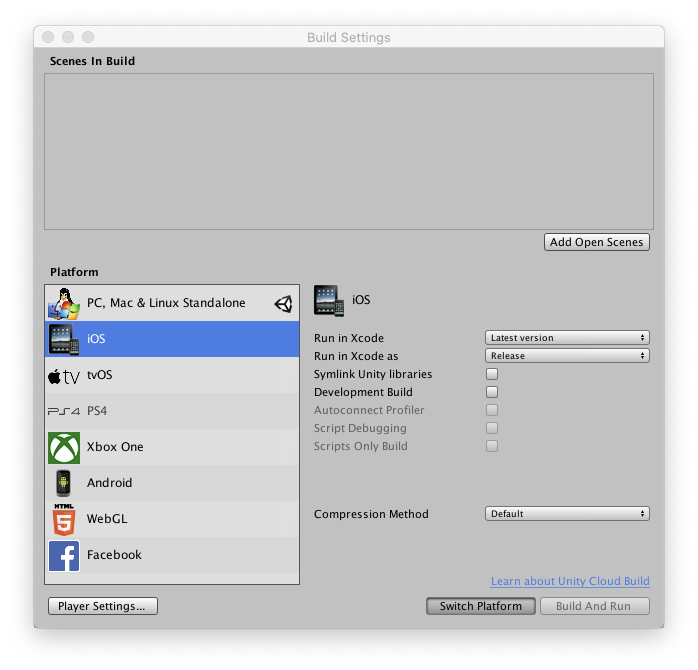

Menu Bar -> File -> Build Settings.

A new window will come up. On its left, there will be a list of platforms to select from. Select iOS, and on the right side there will be a “Switch Platform” button. Once you click it, Unity will reload the project for iOS, which might take a few seconds. If you don’t have the iOS platform installed, there will be an option to install it once you select the platform.

Next, we will add the scene we want included in our build. We can always add a scene later, but we can do it right now, as Unity creates a default scene called “SampleScene”, which we’ll be using in this case. In the top portion of the Build Settings window, under Scenes in Build, you will see the list of scenes, which is empty for now. Click on the Add Open Scenes button right below it, which will add the currently open SampleScene to the list.

Importing SDKs and Tools

Back when ARKit was launched, it had a standalone SDK for Unity, which is now deprecated. Now we have AR Foundation, a cross-platform augmented reality wrapper for working with different core SDKs. In this case, ARKit is our core SDK.

AR Foundation provides a unified system and APIs to interact with our underlying AR engine. We’ll look at AR Foundation in depth in a separate tutorial. For our current requirements, we’ll need to install both AR Foundation and ARKit for our application. We will be working mostly with AR Foundation, which will then interface with ARKit by itself. Since these have first-party support from Unity, their installation is super simple, and they can be found in the Package Manager.

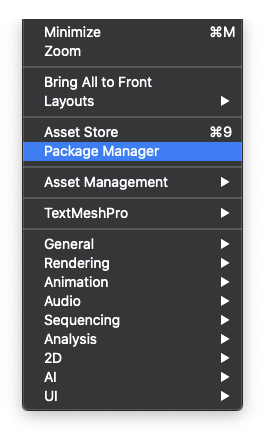

Window -> Package Manager.

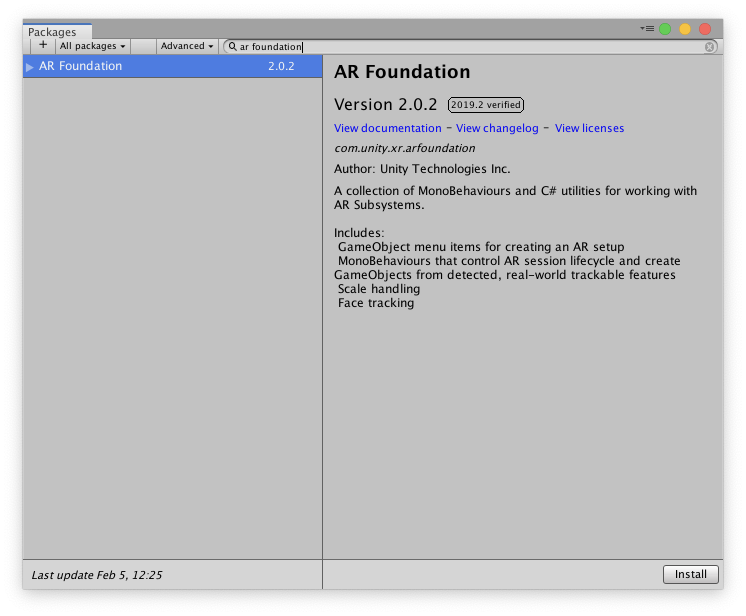

In the search bar type “ar foundation” and it should show the latest AR Foundation SDK available for the current version of Unity. The current version used is 2.0.2. Select AR Foundation from the list and click on Install.

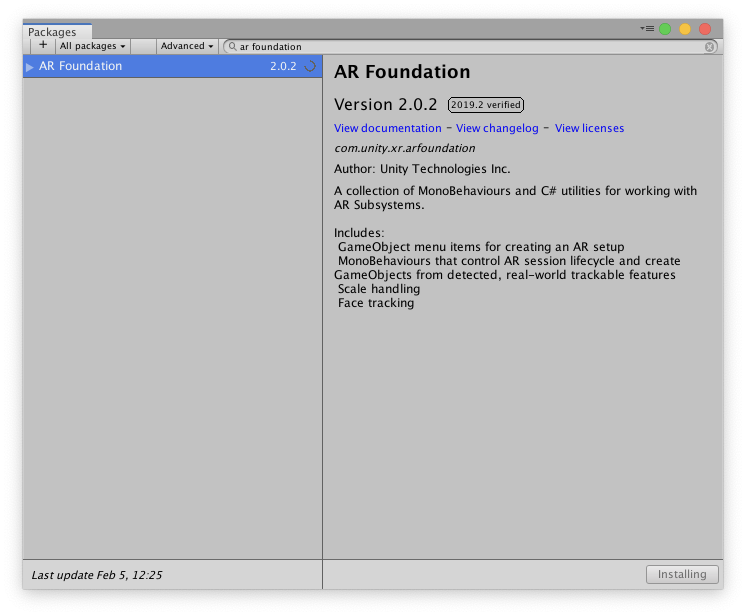

Unity will now begin to download the package and will start installing it. It might take a minute or two until it is installed and imported into our project.

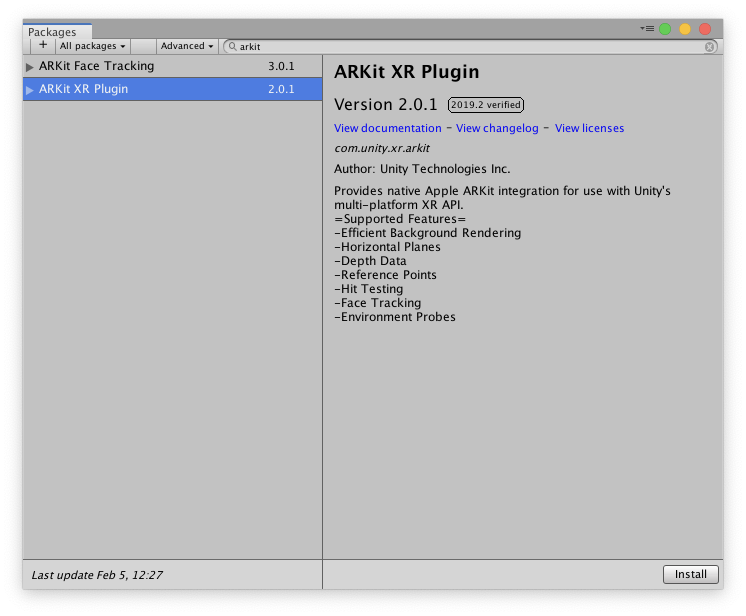

Next, we import the ARKit SDK. In the same Package Manager window, search for ARKit. It should show a few results, but the one we’re interested in is the ARKit XR Plugin; version 2.0.1 is what I’m using. Install it, and Unity will download it and import it into our project.

That’s all we need to create our AR scene, which we’ll do next!

Creating the AR scene

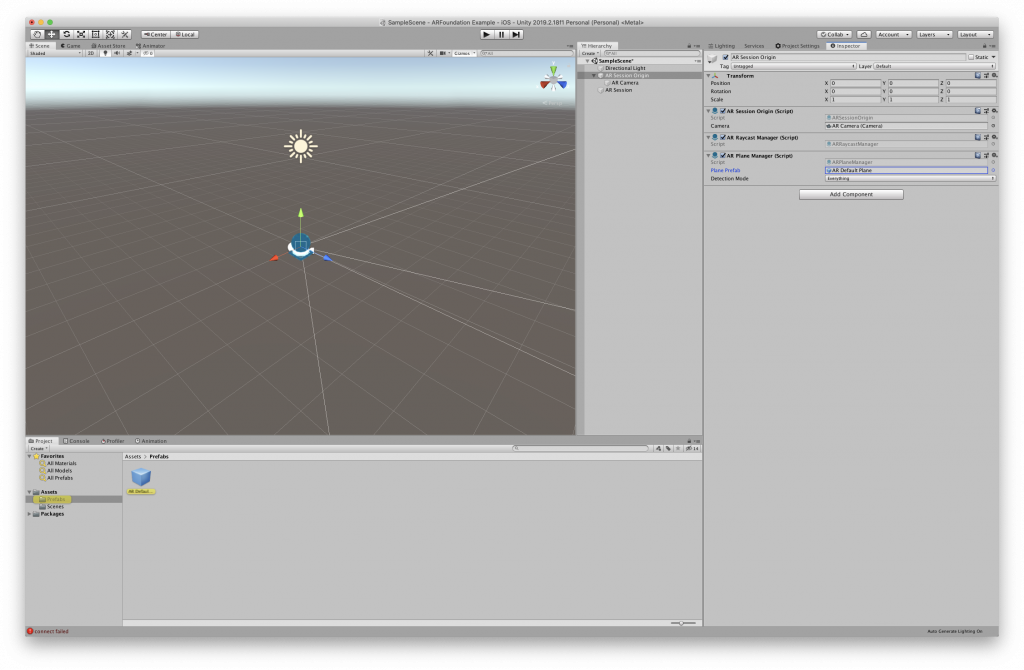

Creating AR Cameras and AR Session Objects

Unity by default creates a MainCamera for us in the scene. Although this could be used as an AR camera, we will replace it with the AR Foundation camera setup. We will then have much more control over our AR experience.

This is how it actually works:

Whenever an AR app runs, it does so inside what is called an AR Session. All the initialization of the camera, tracking, and so on starts with an AR Session. Using AR Foundation, we can manually start, pause, or stop a session, and do much more, which we’ll see in later tutorials.

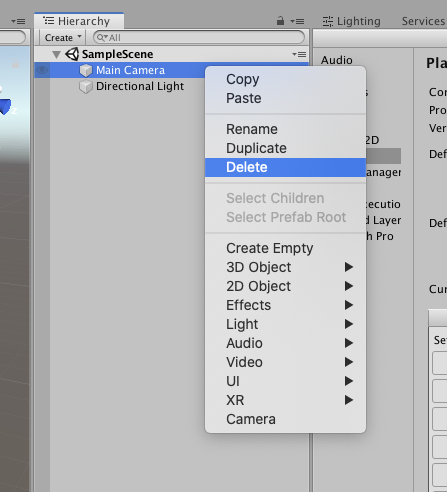

Delete the MainCamera gameobject from the Hierarchy window.

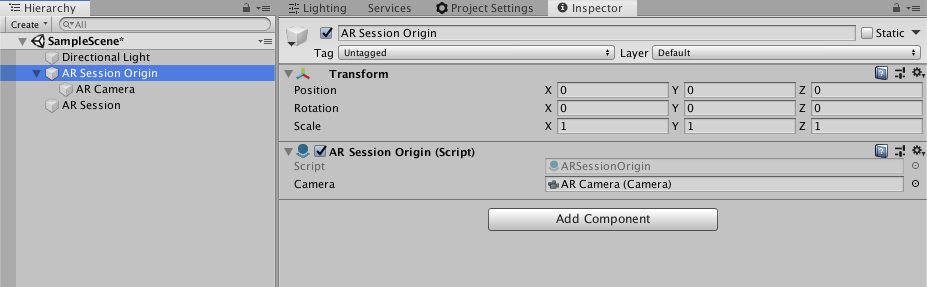

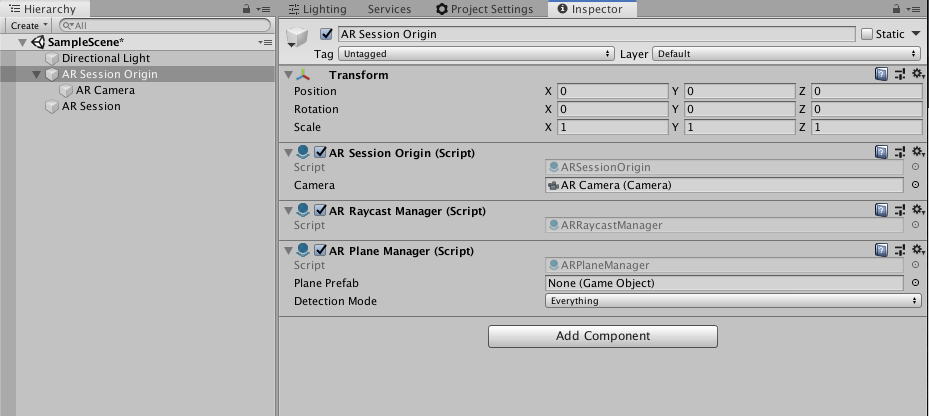

We’ll add the AR Foundation camera by right-clicking in the Hierarchy window -> XR -> AR Session Origin. A new GameObject will be created, which will have an AR Camera GameObject as its child. This will be the source of our AR Session.

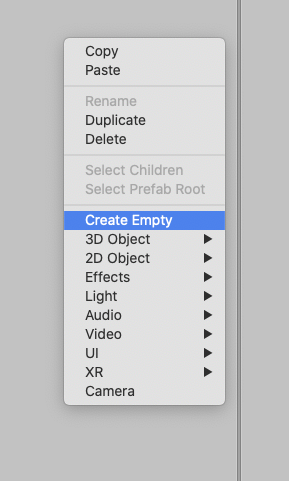

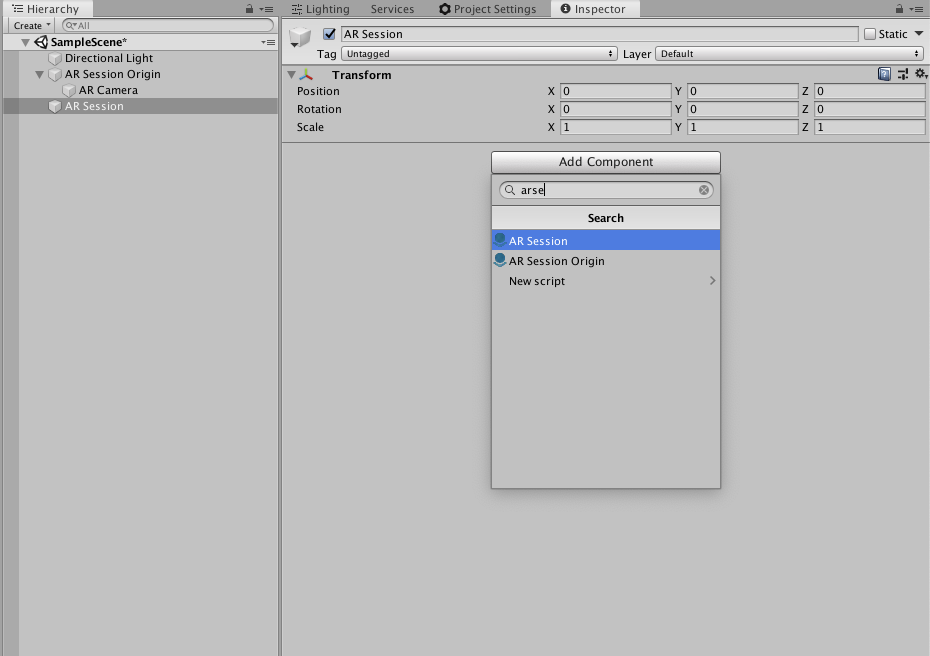

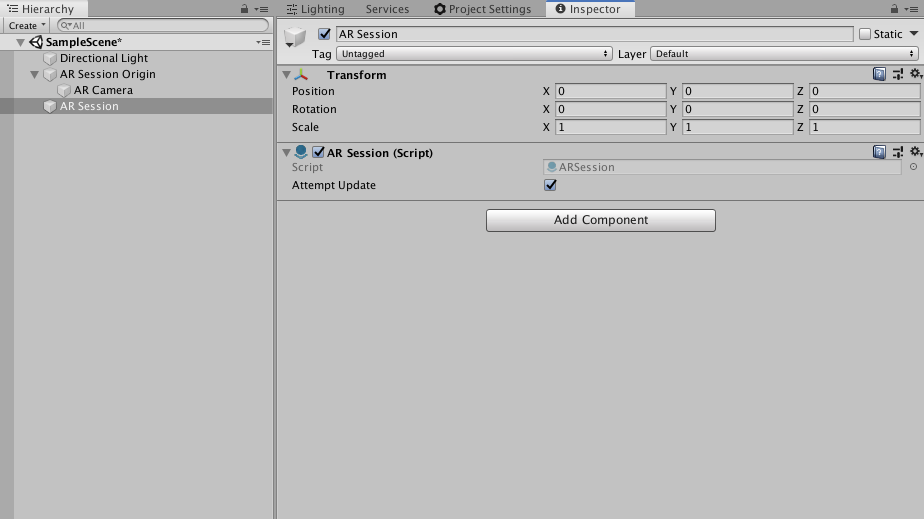

Next, we add an AR Session component to our scene. We first create a new GameObject by right-clicking in the Hierarchy -> Create Empty, and rename the created GameObject to ARSession.

Select the ARSession GameObject and, from the Inspector window -> Add Component, search for AR Session and select it.

Creating Raycasting and Plane Detection Objects

A ray is basically an invisible line that is cast from a point in a specified direction. Raycasting means creating such a ray and then checking whether it hit anything, for example a 3D object or a 2D element. In Unity, raycasting is also used for UI interactions through the Graphic Raycaster component. For our AR application, we will use a different raycaster, the AR Raycaster, through the AR Raycast Manager component.
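To make the idea concrete, here is a minimal, engine-independent sketch in Python of what a raycast against a flat surface boils down to. This is not AR Foundation’s API: the names `Ray`, `Plane` and `raycast_plane` are made up for illustration, and the plane is assumed to be infinite, whereas a detected AR plane has boundaries.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    """Dot product of two 3D vectors."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


@dataclass
class Ray:
    origin: Vec3     # where the ray starts, e.g. the camera position
    direction: Vec3  # where it points (does not need to be normalized)


@dataclass
class Plane:
    point: Vec3   # any point lying on the plane
    normal: Vec3  # vector perpendicular to the plane


def raycast_plane(ray: Ray, plane: Plane, eps: float = 1e-9) -> Optional[Vec3]:
    """Return the point where the ray hits the plane, or None if it misses."""
    denom = dot(ray.direction, plane.normal)
    if abs(denom) < eps:
        return None  # the ray runs parallel to the plane
    to_plane = tuple(p - o for p, o in zip(plane.point, ray.origin))
    t = dot(to_plane, plane.normal) / denom
    if t < 0:
        return None  # the plane is behind the ray's origin
    return tuple(o + t * d for o, d in zip(ray.origin, ray.direction))


# A camera held 1.5 m above the floor, looking forward and down at 45 degrees.
camera_ray = Ray(origin=(0.0, 1.5, 0.0), direction=(0.0, -1.0, 1.0))
floor = Plane(point=(0.0, 0.0, 0.0), normal=(0.0, 1.0, 0.0))
print(raycast_plane(camera_ray, floor))  # (0.0, 0.0, 1.5)
```

In the app itself, AR Foundation performs this kind of test against the planes it has detected, so we never have to write the math ourselves.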

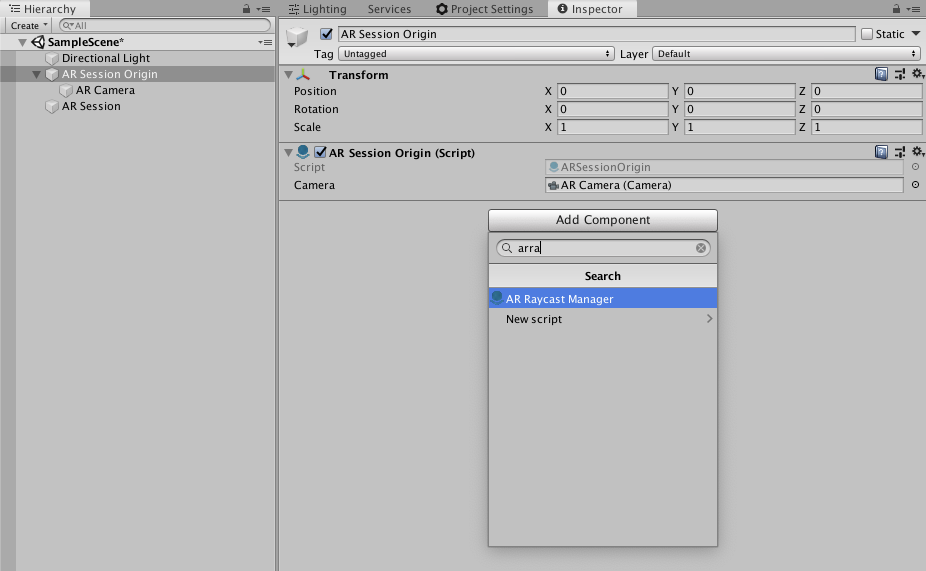

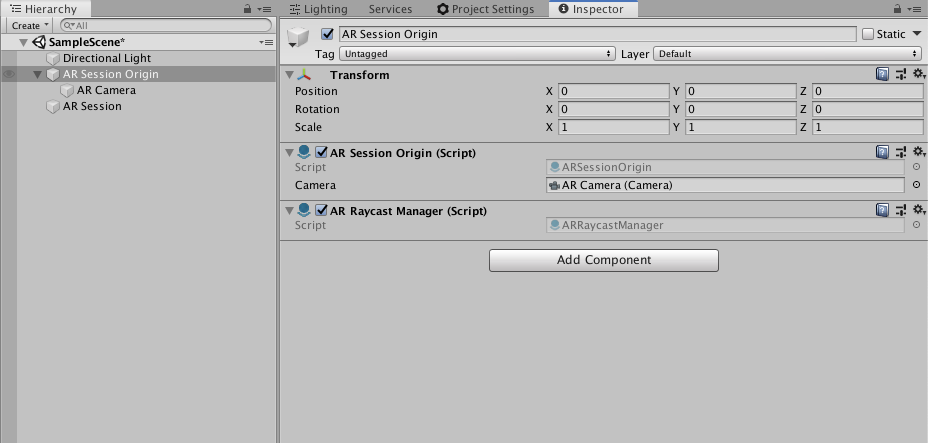

We will add the AR Raycast Manager component to our AR Session Origin as the raycaster requires the AR Camera and the Session Origin Components to work. Select the AR Session Origin -> Add Component -> AR Raycast Manager.

Finally, we can get to our Plane Detection objects!

Plane Detection Objects

Plane detection is the process of detecting both vertical and horizontal planes in our environment. Again, we’ll leverage AR Foundation to create the required objects that perform plane detection in our AR App. This is achieved using the AR Plane Manager component which is added to the AR Session Origin gameobject.

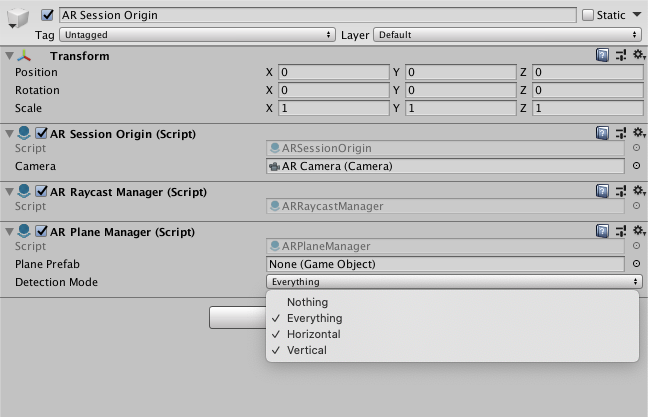

It can be configured to detect only a specific type of plane, but for now we want all the available types, which can be selected from the Detection Mode field of the AR Plane Manager component.
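The Detection Mode field behaves like a set of flags that can be combined. The following Python sketch is only a conceptual model of that idea, not Unity’s implementation: `DetectionMode`, `classify`, `is_reported` and the `tolerance` value are all hypothetical, and the real decision about whether a surface is horizontal or vertical is made inside ARKit.

```python
from enum import Flag


class DetectionMode(Flag):
    """Hypothetical stand-in for the Detection Mode dropdown."""
    NONE = 0
    HORIZONTAL = 1
    VERTICAL = 2
    EVERYTHING = HORIZONTAL | VERTICAL


def classify(normal, up=(0.0, 1.0, 0.0), tolerance=0.1):
    """Label a surface by its unit normal: floors and tables are HORIZONTAL,
    walls are VERTICAL, and slanted surfaces are NONE."""
    alignment = abs(sum(n * u for n, u in zip(normal, up)))
    if alignment > 1.0 - tolerance:
        return DetectionMode.HORIZONTAL  # normal points (almost) straight up or down
    if alignment < tolerance:
        return DetectionMode.VERTICAL    # normal is (almost) level with the ground
    return DetectionMode.NONE


def is_reported(normal, mode):
    """Would a surface with this normal be reported under the given mode?"""
    return bool(classify(normal) & mode)


wall_normal = (1.0, 0.0, 0.0)
print(is_reported(wall_normal, DetectionMode.EVERYTHING))  # True
print(is_reported(wall_normal, DetectionMode.HORIZONTAL))  # False
```

With both flags set, floors, tables and walls are all reported, which is what we want for this project.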

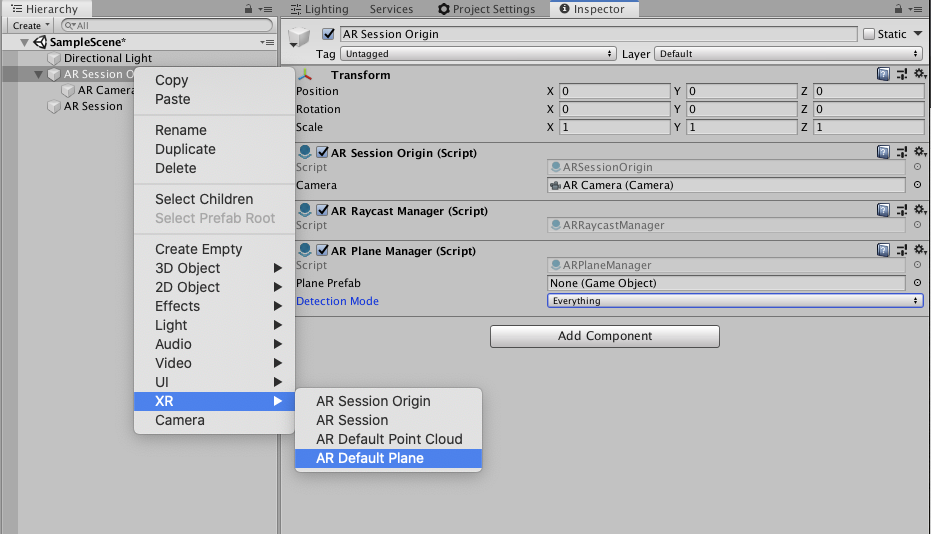

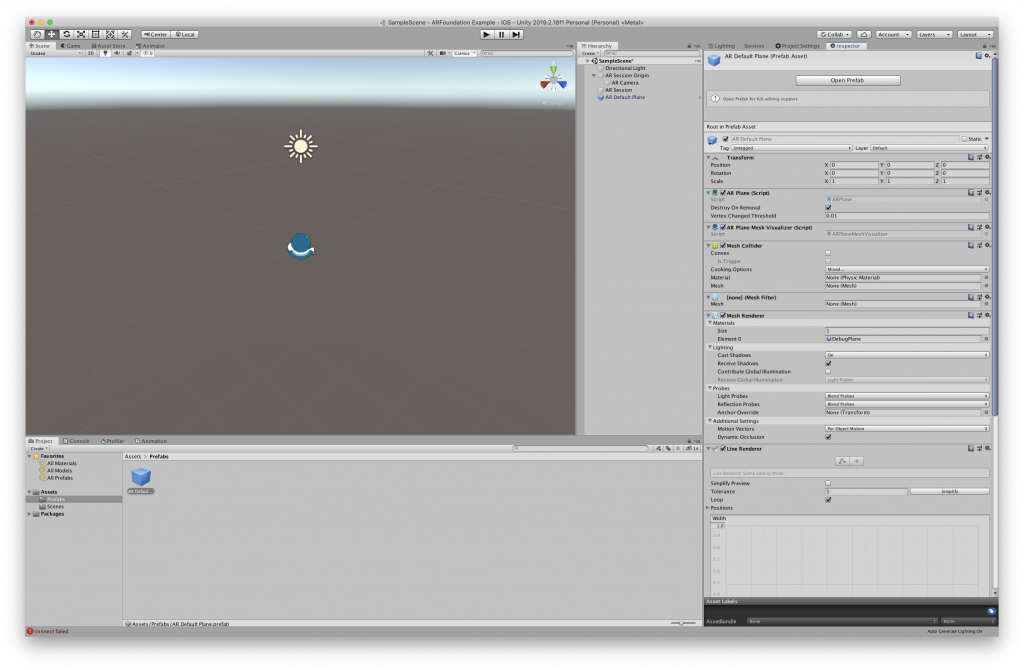

What we need is a 3D plane object so that we can show where a plane was detected. We can either create a custom mesh for our plane or just use a pre-made one, which is what we’ll do now. Right-click in the Hierarchy -> XR -> AR Default Plane. It will create a new AR Default Plane GameObject in the scene.

Next, if we want this plane to be instantiated whenever a plane is detected, the GameObject has to be available to our AR Plane Manager. We usually create prefabs out of GameObjects, which allow us to configure and store the GameObject. To create a prefab, create a new folder called Prefabs in the Project window and simply drag and drop the AR Default Plane into the folder. The AR Default Plane GameObject can now be deleted from the Hierarchy.

We can now assign the AR Default Plane prefab to the Plane Prefab field of the AR Plane Manager component on the AR Session Origin GameObject by simply dragging and dropping it into the field.

That’s it! We can now detect planes! But wait, first we have to build and deploy the app, which is next.

Building the AR application

Player Settings

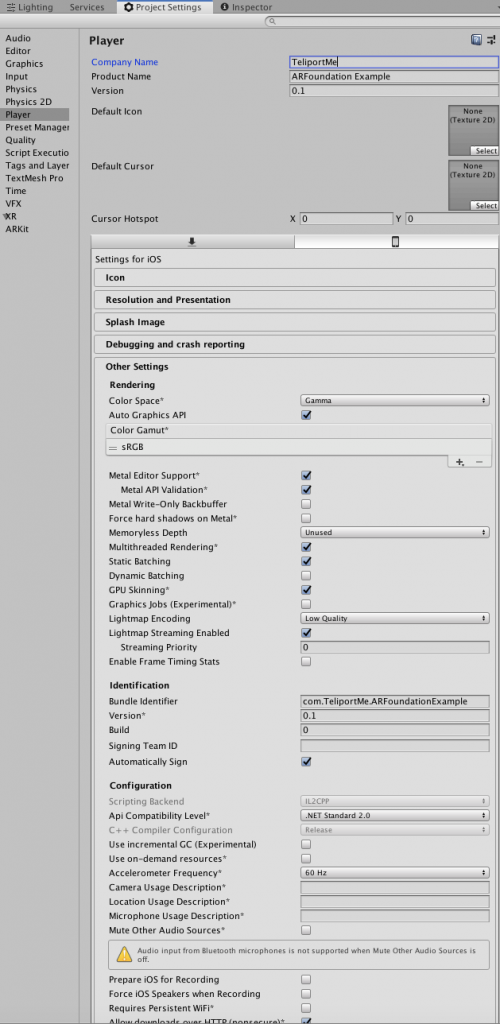

To build the app with Unity, we first need to set up some properties for the app, such as Company Name and Package Name, under Player Settings. Go to Edit -> Project Settings and select Player in the left-side menu to open the Player Settings page.

Fill in your Company Name and the Product Name which will be the name of the App that will show up on your iOS device. Also, fill in the Bundle Identifier followed by a version and build number if you’d prefer.

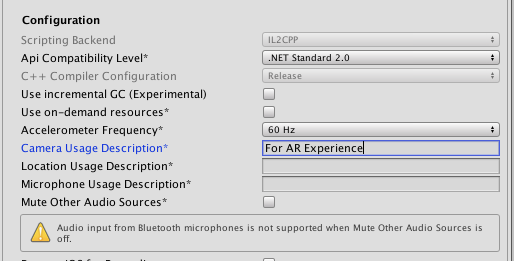

We also need to add a few more properties. First, let’s add the Camera Usage Description, which is the text that will show up when the user is prompted for camera access.
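On iOS, this text is stored in the app’s Info.plist under the `NSCameraUsageDescription` key. As a hedged sketch, the Python snippet below checks that a plist contains a non-empty value for that key. The helper name `camera_usage_description` and the sample values are made up for illustration, and the plist is built in memory here so that the example runs on its own.

```python
import plistlib

CAMERA_KEY = "NSCameraUsageDescription"  # iOS key holding the camera prompt text


def camera_usage_description(plist_bytes: bytes) -> str:
    """Return the camera prompt text from Info.plist data, or raise if it is missing."""
    info = plistlib.loads(plist_bytes)
    text = info.get(CAMERA_KEY, "")
    if not text.strip():
        raise ValueError(CAMERA_KEY + " is missing or empty")
    return text


# Sample plist built in memory; the values are placeholders.
sample = plistlib.dumps({
    "CFBundleName": "ARDemo",
    CAMERA_KEY: "Required for augmented reality",
})
print(camera_usage_description(sample))  # Required for augmented reality
```

To check a real build, you could pass in the bytes of the Info.plist file from the Xcode project folder that Unity generates. Its exact location depends on where you choose to save the project.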

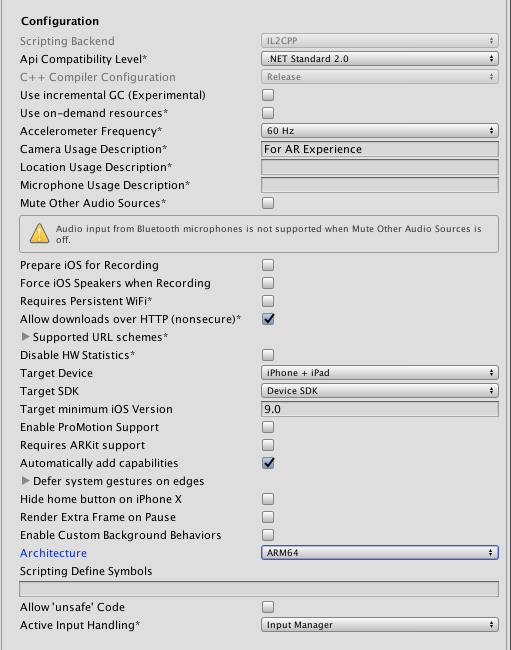

Next, set the Architecture to ARM64.

Now we can build our app. Go to File -> Build Settings and click on Build and Run. This builds the app, creates a new Xcode project, opens Xcode, starts the Xcode build process, and deploys the app to your connected iOS device. You will need to connect your iPhone/iPad now.

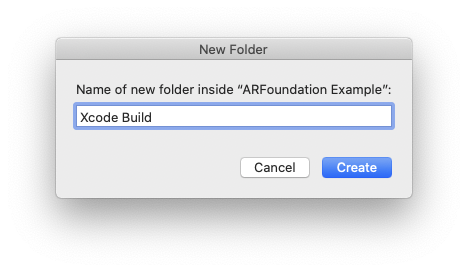

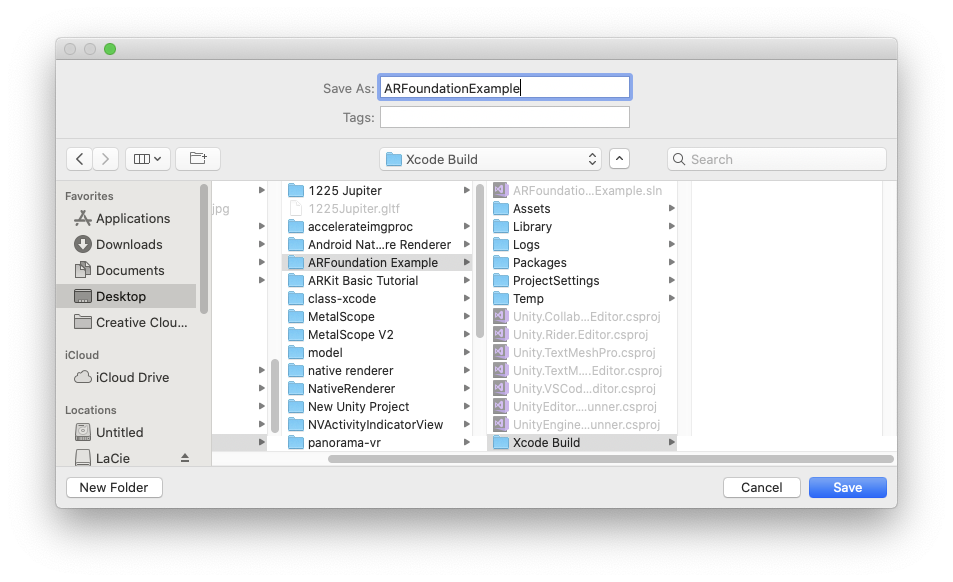

Unity will ask to create a folder to store the Xcode project in. Choose your desired location.

Unity will now start compiling and building the app. It may take a while, and once done it should open up Xcode.

Installing to an iOS device using Xcode

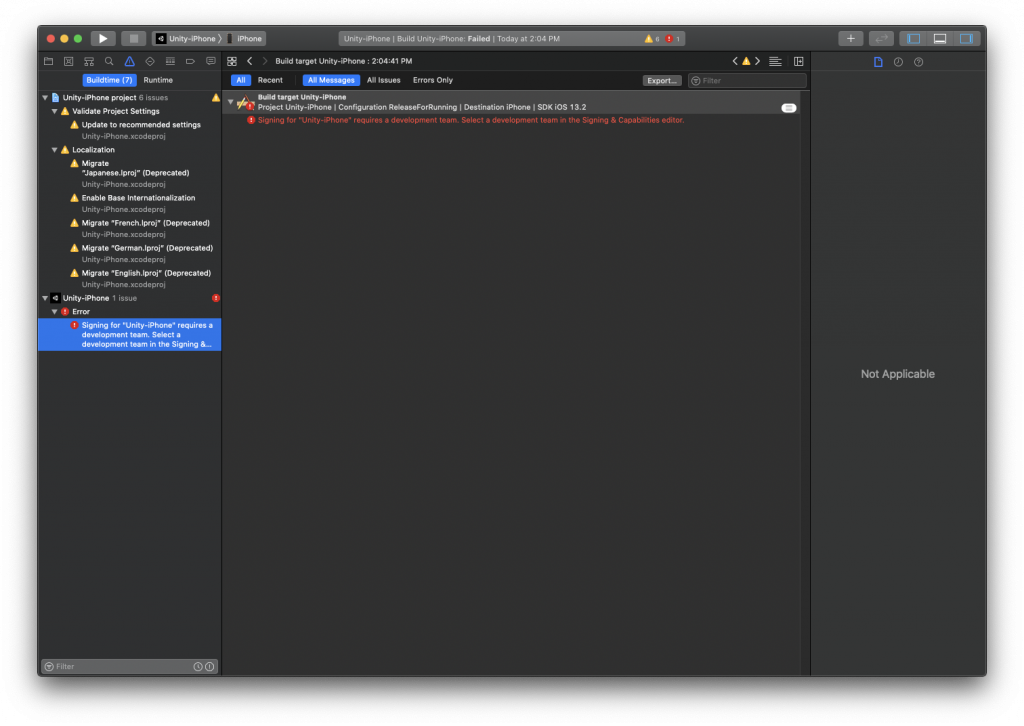

Finally, to build with Xcode, you need to have a provisioning profile set up in Xcode. If this is done, Xcode should start its build process automatically, install the app, and launch it as well.

If you don’t have that done yet, the Xcode build will fail.

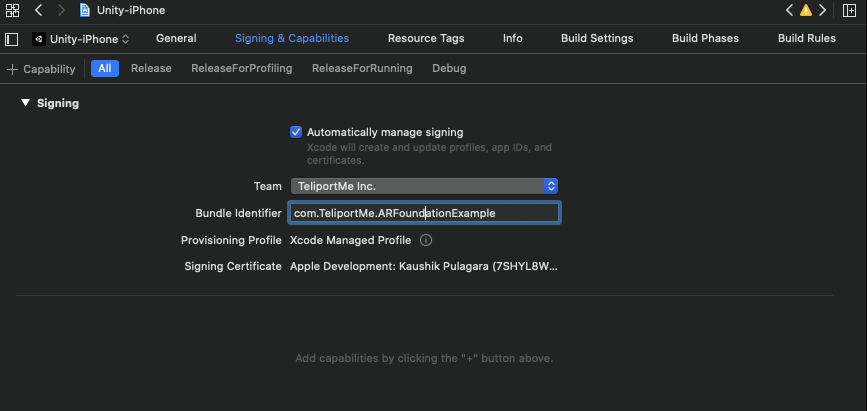

To fix this, click on the project folder view in the Navigation Bar -> select Unity-iPhone -> select Signing & Capabilities in the main window.

From there you can select your provisioning profile.

Finally, select your connected device and click on the Play icon in the Xcode toolbar to start the build process again.

Once successful, Xcode will install the app on your connected device and run it automatically.

That’s it!

Thanks for following along and please comment below if you have any queries or thoughts.
