Day 9: Face Tracking Using ARKit and AR Foundation with Unity

In this tutorial, we will show you how to track your face with ARKit and AR Foundation. You can use this to create AR face filters, something Instagram and Snapchat have made famous.

This is part of a 30-day sprint in which we try to publish 30 projects in 30 days. That means building full projects from scratch, double-checking the code, writing the tutorial, and then posting it. If you spot any typos, do let us know. I hope you enjoy this tutorial and project.

Introduction

In the previous few tutorials, we have been learning about the different trackables that are part of the AR Foundation SDK. These leverage the features that ARKit and ARCore provide on their respective platforms and hardware. You can find all those articles here.

Unity recently released an updated version of AR Foundation that works with ARKit 3, which brings a plethora of new features. One of them is face tracking.

Face Tracking with ARKit and AR Foundation

ARKit provides robust face tracking for AR apps. It can detect the position, topology, and expression of the user’s face, all with high accuracy and in real time. It can also be used to drive a 3D character.

We can detect faces in a front-camera AR experience, overlay virtual content, and animate facial expressions in real time. This requires the front-facing TrueDepth camera, so it only works on the iPhone X, XS, XS Max, XR, the iPhone 11 series, and iPad Pro models with Face ID (2018 and later).

There are some really fun things you can do with face tracking. The first is selfie effects, where you render a semitransparent texture onto the face mesh for effects like a virtual tattoo, face paint, makeup, or a beard or mustache, or overlay the mesh with jewelry, masks, hats, and glasses. The second is face capture, where you capture the facial expression in real time and use it as rigging to project expressions onto an avatar or a character in a game.
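At its core, face capture copies ARKit’s per-expression coefficients onto an avatar’s blend shapes every frame. The Python sketch below illustrates only that retargeting step, under a few stated assumptions. ARKit reports each coefficient from 0.0 (neutral) to 1.0 (maximum movement), and Unity’s SkinnedMeshRenderer blend shape weights conventionally run from 0 to 100. The ARKit location names (`jawOpen`, `eyeBlinkLeft`, …) are real, but the avatar shape names, the mapping table, and the `gain` parameter are hypothetical.

```python
from typing import Dict

# ARKit coefficients range from 0.0 (neutral) to 1.0 (fully expressed);
# Unity blend shape weights conventionally range from 0 to 100.
UNITY_MAX_WEIGHT = 100.0

# Hypothetical mapping from ARKit blend shape locations to an avatar's shape names.
ARKIT_TO_AVATAR: Dict[str, str] = {
    "jawOpen": "Mouth_Open",
    "eyeBlinkLeft": "Blink_L",
    "eyeBlinkRight": "Blink_R",
    "mouthSmileLeft": "Smile_L",
    "mouthSmileRight": "Smile_R",
}


def retarget(coefficients: Dict[str, float], gain: float = 1.0) -> Dict[str, float]:
    """Convert ARKit coefficients into avatar blend shape weights (0-100).

    Coefficients without a mapping are skipped, and every result is clamped
    so an exaggerated gain never pushes a weight outside 0-100.
    """
    weights: Dict[str, float] = {}
    for location, value in coefficients.items():
        target = ARKIT_TO_AVATAR.get(location)
        if target is None:
            continue  # the avatar has no shape for this expression
        scaled = value * gain * UNITY_MAX_WEIGHT
        weights[target] = max(0.0, min(UNITY_MAX_WEIGHT, scaled))
    return weights


print(retarget({"jawOpen": 0.5, "eyeBlinkLeft": 1.0, "browInnerUp": 0.3}))
# {'Mouth_Open': 50.0, 'Blink_L': 100.0}
```

In a Unity project, the resulting weights would be applied to the avatar mesh with `SkinnedMeshRenderer.SetBlendShapeWeight`. The clamp lets you boost subtle expressions with `gain` without overshooting.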

Usage with AR Foundation and Unity

The face tracking feature is exposed within AR Foundation as part of the Face Subsystem, similar to other subsystems. You can subscribe to an event that is invoked when a new face is detected. That event provides basic information about the face, including its pose (position and rotation). The subsystem can also provide more information about the face, including a description of its mesh and the blendshape coefficients that describe its expression. Other events let you detect when a face has been updated or removed.
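The Python model below makes the added/updated/removed semantics concrete. It is not Unity or AR Foundation code. `Face`, `FaceEvents`, and `process_frame` are hypothetical names, and the frame-to-frame diff only illustrates what the real subsystem reports for you. In AR Foundation itself, `ARFaceManager` bundles these changes into a single `facesChanged` event whose arguments carry `added`, `updated`, and `removed` lists.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Vector3 = Tuple[float, float, float]
Quaternion = Tuple[float, float, float, float]  # (x, y, z, w)


@dataclass
class Face:
    """Hypothetical face record: a stable ID plus the pose (position and rotation)."""
    face_id: str
    position: Vector3
    rotation: Quaternion


Handler = Callable[[Face], None]


@dataclass
class FaceEvents:
    """Hypothetical stand-in for a face subsystem's added/updated/removed events."""
    on_added: List[Handler] = field(default_factory=list)
    on_updated: List[Handler] = field(default_factory=list)
    on_removed: List[Handler] = field(default_factory=list)
    tracked: Dict[str, Face] = field(default_factory=dict, init=False)

    def process_frame(self, detected: List[Face]) -> None:
        """Diff this frame's faces against the previous frame and invoke handlers."""
        current = {face.face_id: face for face in detected}
        for face_id, face in current.items():
            # A face seen last frame is an update; an unseen one is new.
            handlers = self.on_updated if face_id in self.tracked else self.on_added
            for handler in handlers:
                handler(face)
        for face_id, face in self.tracked.items():
            # Faces that disappeared since the last frame are reported as removed.
            if face_id not in current:
                for handler in self.on_removed:
                    handler(face)
        self.tracked = current


# Usage: subscribe to all three events, then feed three frames.
log: List[str] = []
events = FaceEvents()
events.on_added.append(lambda f: log.append(f"added {f.face_id}"))
events.on_updated.append(lambda f: log.append(f"updated {f.face_id}"))
events.on_removed.append(lambda f: log.append(f"removed {f.face_id}"))

identity = (0.0, 0.0, 0.0, 1.0)
events.process_frame([Face("A", (0.0, 0.0, 0.4), identity)])   # face appears
events.process_frame([Face("A", (0.0, 0.01, 0.4), identity)])  # face moves
events.process_frame([])                                         # face leaves view
print(log)  # ['added A', 'updated A', 'removed A']
```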

Implementation

AR Foundation, as usual, provides an abstraction via the ARFaceManager component. Add this component to the ARSessionOrigin in the scene, and whenever it detects a face it creates a copy of the “FacePrefab” prefab and adds it to the scene as a child of the ARSessionOrigin. It also updates and removes the generated “Face” GameObjects as the faces are updated or removed.
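The bookkeeping described above (one prefab copy per tracked face, parented under the session origin, moved on updates and destroyed on removal) can be modeled in a few lines of Python. This is an illustrative sketch, not Unity code. `GameObject` and `FaceManagerModel` are hypothetical, `copy.deepcopy` stands in for `Instantiate`, removing the child stands in for `Destroy`, and the instance naming is made up for the example.

```python
import copy
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Vector3 = Tuple[float, float, float]


@dataclass
class GameObject:
    """Minimal hypothetical stand-in for a Unity GameObject."""
    name: str
    position: Vector3 = (0.0, 0.0, 0.0)
    children: List["GameObject"] = field(default_factory=list)


@dataclass
class FaceManagerModel:
    """Sketch of ARFaceManager-style bookkeeping: one prefab copy per tracked face."""
    origin: GameObject
    face_prefab: GameObject
    instances: Dict[str, GameObject] = field(default_factory=dict, init=False)

    def on_added(self, face_id: str, position: Vector3) -> None:
        instance = copy.deepcopy(self.face_prefab)  # plays the role of Instantiate(prefab)
        instance.name = f"Face {face_id}"            # illustrative naming only
        instance.position = position
        self.origin.children.append(instance)       # parent it under the session origin
        self.instances[face_id] = instance

    def on_updated(self, face_id: str, position: Vector3) -> None:
        self.instances[face_id].position = position  # follow the tracked pose

    def on_removed(self, face_id: str) -> None:
        instance = self.instances.pop(face_id)
        # Plays the role of Destroy(instance): drop it from the origin's children.
        self.origin.children = [c for c in self.origin.children if c is not instance]


origin = GameObject("AR Session Origin")
manager = FaceManagerModel(origin, GameObject("FacePrefab"))
manager.on_added("A", (0.0, 0.0, 0.4))
manager.on_updated("A", (0.0, 0.02, 0.4))
print([(c.name, c.position) for c in origin.children])  # [('Face A', (0.0, 0.02, 0.4))]
manager.on_removed("A")
print(origin.children)  # []
```

Copying the prefab, rather than moving the prefab itself, keeps the original asset untouched, so the manager can spawn a fresh instance for every face it detects.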

In this article, we’ll create an application that detects and tracks individual faces. We’ll apply a mask to the detected face to see how the tracking and its features work.

This is what the end result will look like.

Getting Started

Prerequisites

- Unity 2019.2.18f1

- AR Foundation and ARKit set up as in the earlier tutorial here.

- One of the compatible devices mentioned above.

Updating AR Foundation and ARKit

If you’re coming from the previous tutorials, the first step is to update the SDKs to newer versions using the Package Manager.

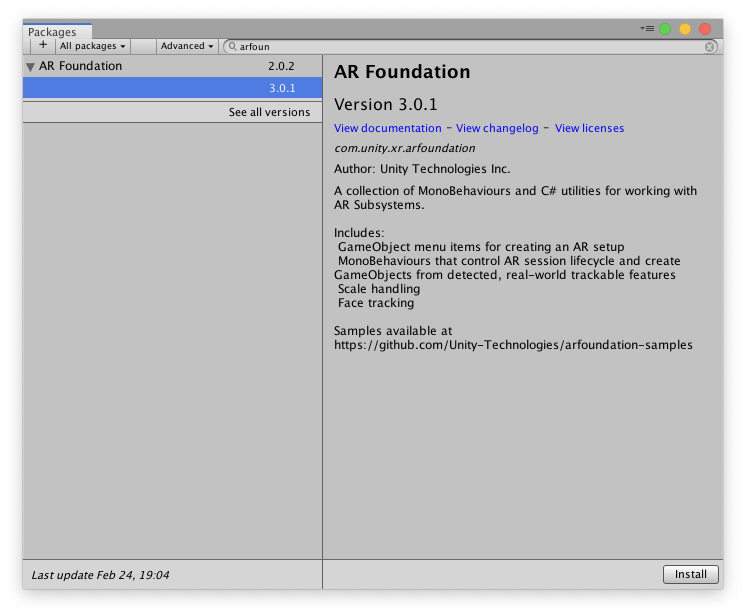

First, let’s update AR Foundation.

Go to Window -> Package Manager and search for AR Foundation in the search bar.

In the left panel, select AR Foundation and click See all versions. Under that, choose 3.0.1 and install it.

Unity will download and install it; this might take a few seconds.

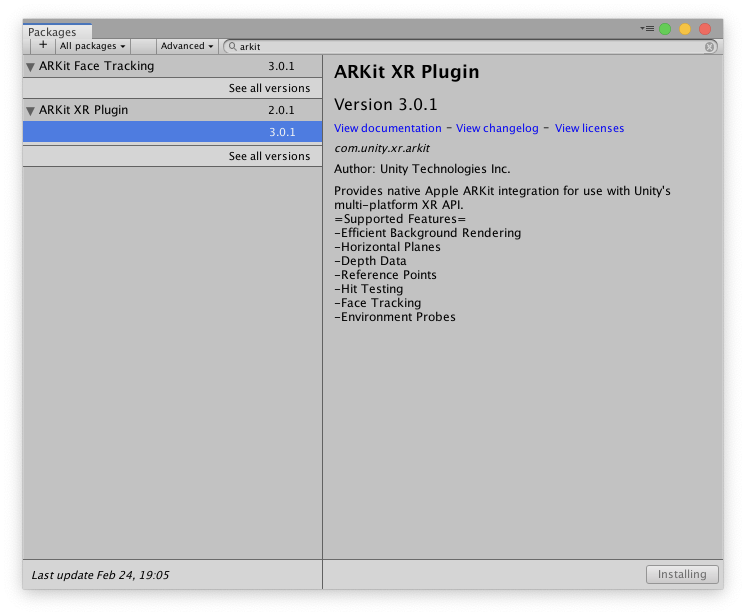

Once that’s done, search for ARKit (the ARKit XR Plugin package) -> See all versions -> 3.0.1 and install it.

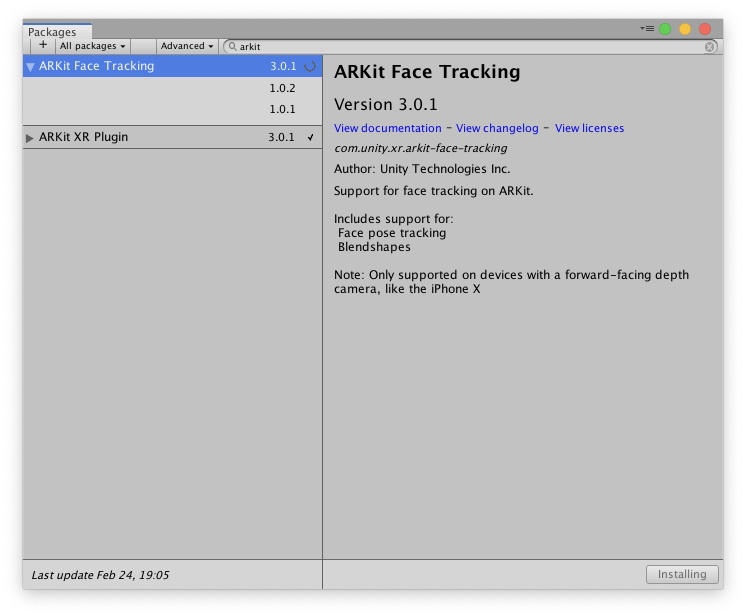

Next, search for ARKit Face Tracking -> See all versions -> 3.0.1 and install it.

Adding Face Tracking Systems

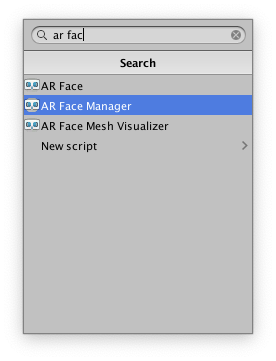

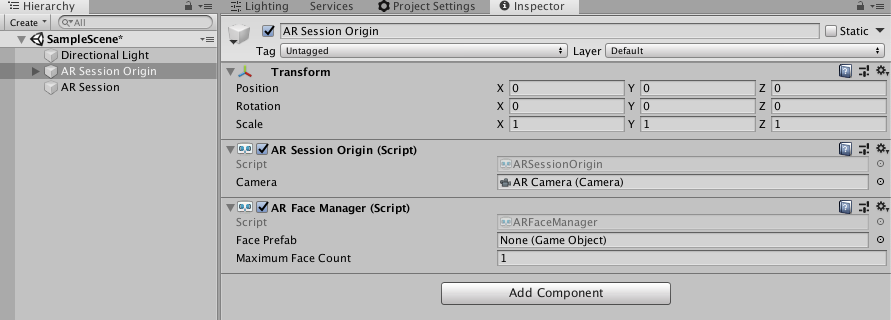

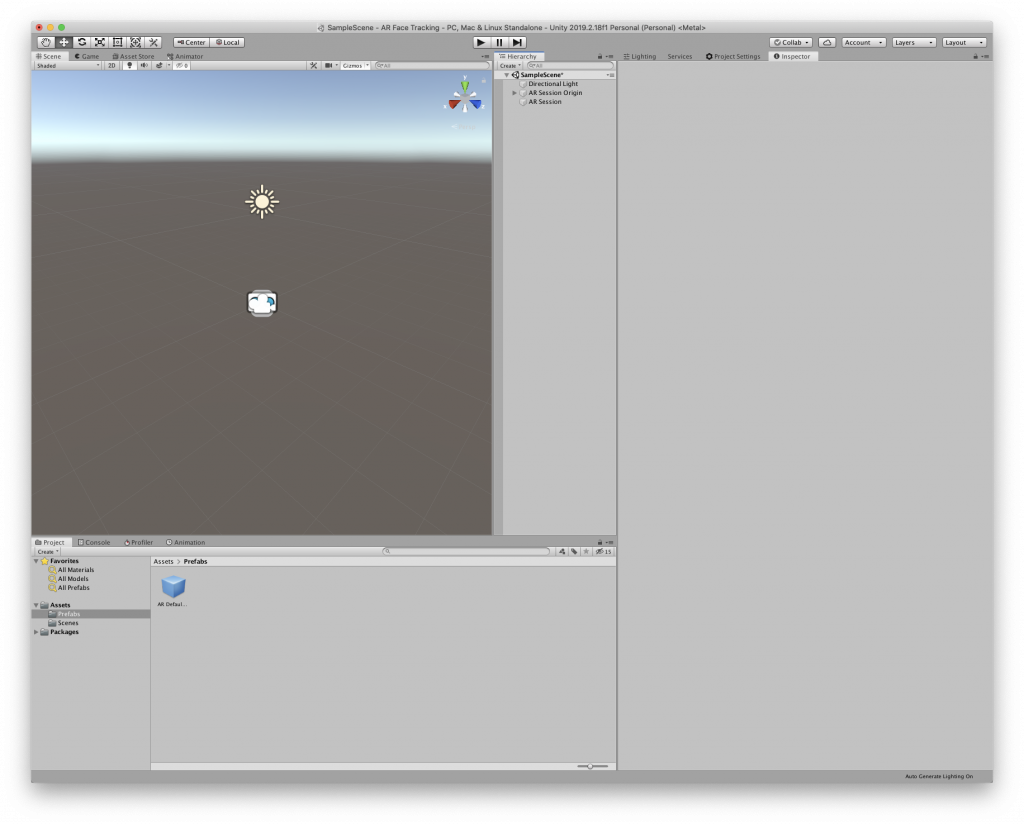

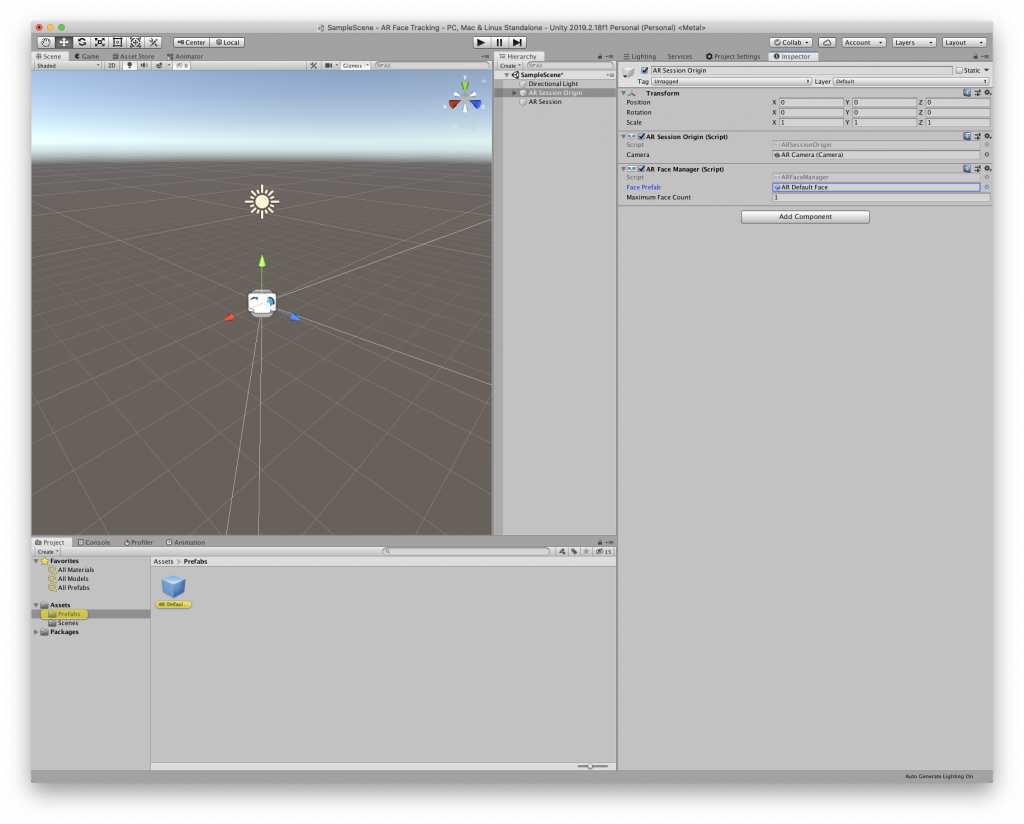

Next, select the AR Session Origin GameObject, then in the Inspector window -> Add Component -> search for AR Face Manager.

Set Maximum Face Count to 1. This defines how many faces can be tracked simultaneously; higher values take more CPU resources.

Let’s now create our Face mesh prefab and assign it to our AR Face Manager’s Face Prefab property.

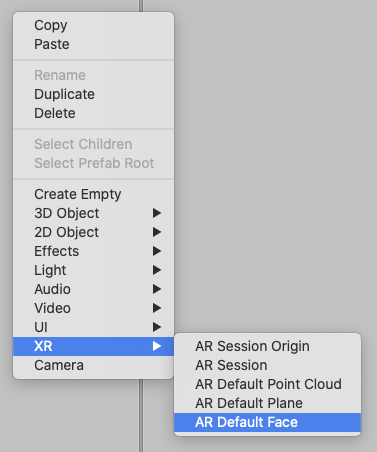

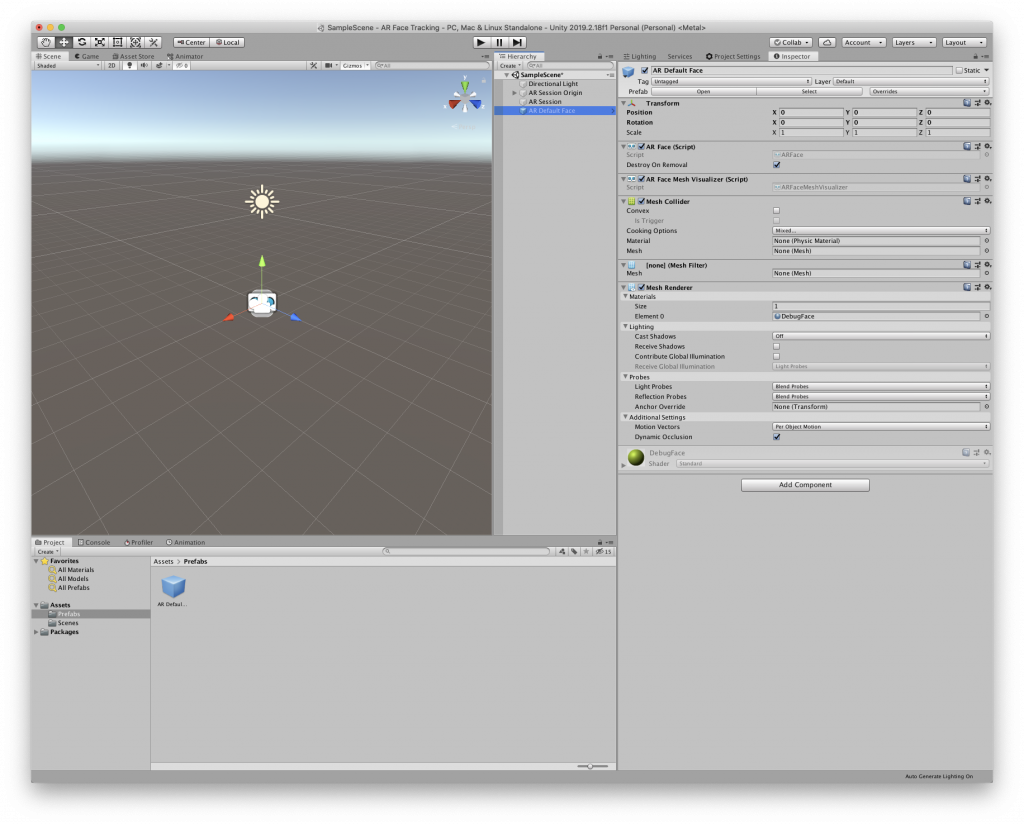

Right-click in the Hierarchy -> XR -> AR Default Face.

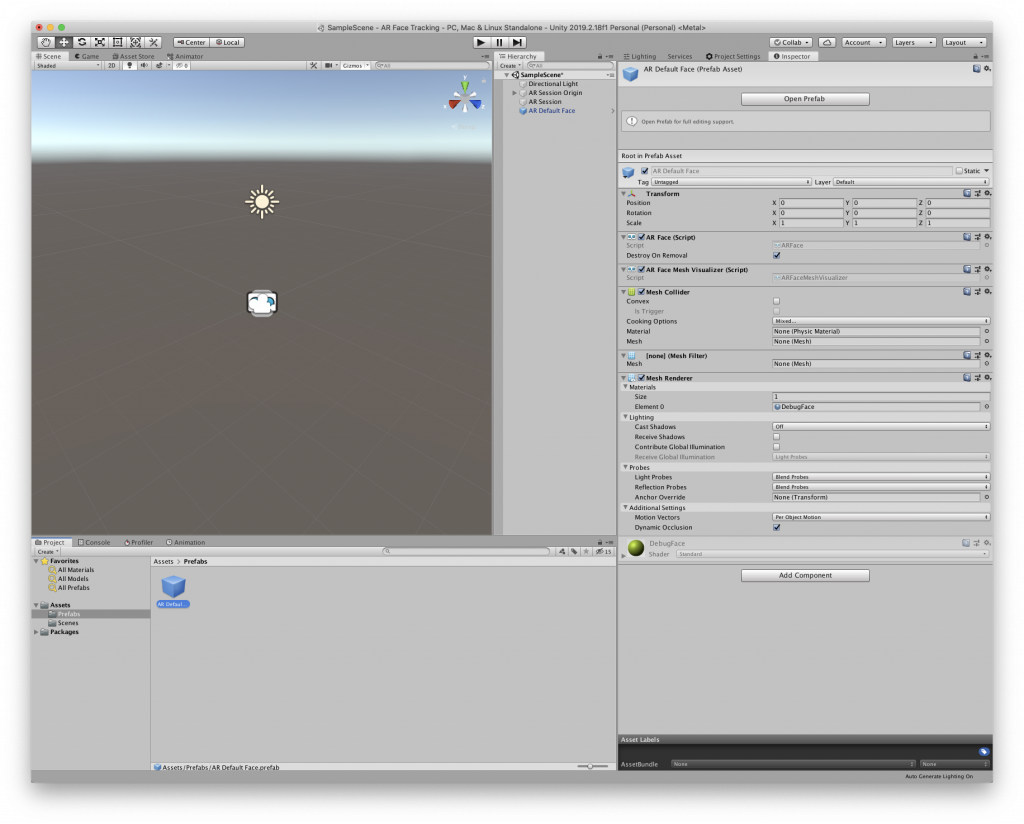

Drag and drop this GameObject into the Prefabs folder in the Project window to make a prefab out of it.

Now you can delete the AR Default Face from the Hierarchy.

Next, assign the prefab to the AR Face Manager’s Face Prefab property.

Project Settings

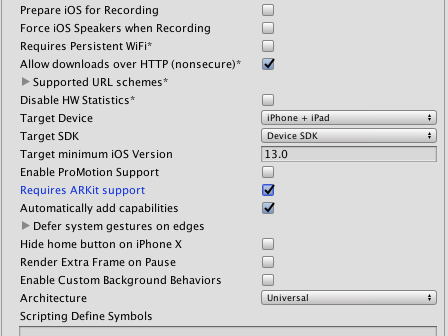

We need to make sure that the minimum iOS target version is iOS 13. Go to Edit -> Project Settings -> Player -> iOS -> Other Settings and set Target minimum iOS Version to 13.0.

That’s it. You can now build the project and use Xcode to deploy it to your device.

Thanks